How We Built the World's First Private AI Gateway for Whistleblowing

The Problem: AI and Sensitive Data Don't Mix

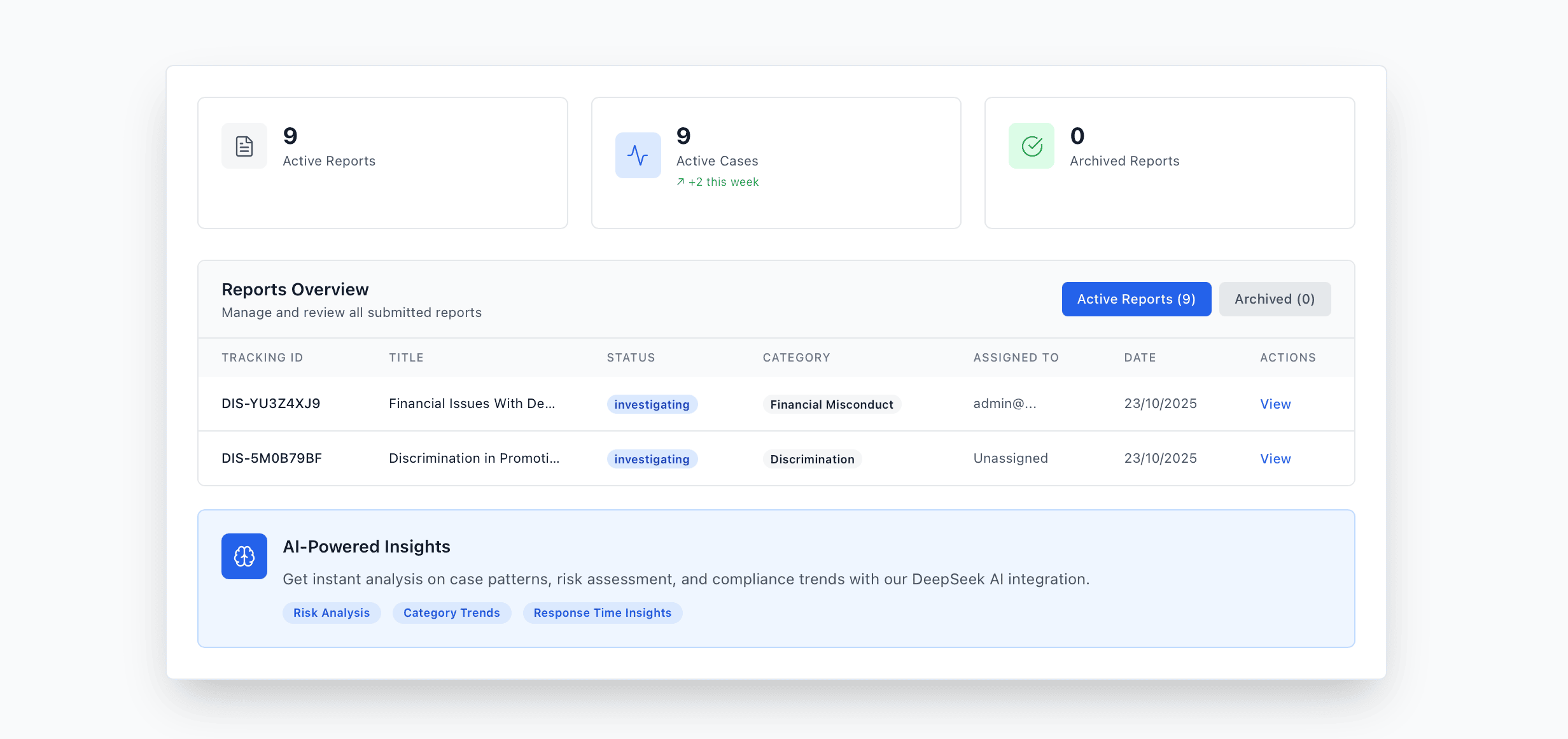

Six months ago, we faced a dilemma that would reshape Disclosurely's entire architecture.

Our compliance officers were drowning in case analysis work. A typical whistleblower investigation takes 30-60 minutes of manual review: reading the report, cross-referencing company policies, assessing risk levels, determining next steps, and documenting everything for audit trails.

With AI tools like ChatGPT and Claude becoming mainstream, the obvious solution seemed simple: just feed cases into an AI and get instant analysis.

But there was a massive problem.

Whistleblower cases contain some of the most sensitive data imaginable:

Personal details of reporters (often anonymous)

Allegations of misconduct (potentially defamatory if leaked)

Protected witness information

Financial fraud evidence

GDPR-protected personal data

Confidential company information

Sending this data to OpenAI, Anthropic, or any third-party AI provider was a compliance nightmare. We'd be violating the very privacy principles our platform was built to protect.

Yet the need was real. Our customers were spending hundreds of hours per month on manual case analysis—time they could be using to actually investigate and resolve issues.

We needed AI-powered case analysis. But we needed it to be private, secure, and compliant.

So we built something that didn't exist: a privacy-first AI gateway specifically designed for handling sensitive whistleblower data.

The Non-Negotiable Requirements

Before we wrote a single line of code, we set boundaries that no shortcut could break.

The system had to ensure that no unredacted sensitive data ever left our environment. It had to detect and redact personal information automatically before any AI saw it, preserve full audit trails without exposing content, and be possible to switch off instantly in the rare case something went wrong.

1. Zero Data Retention at AI Vendors

We would never send raw, unredacted sensitive data to any AI provider. Ever.

2. Automatic PII Detection & Redaction

Users shouldn't have to manually redact emails, phone numbers, or names. The system must do it automatically.

3. Reversible Redaction

Compliance officers sometimes need the original data for legitimate investigations. Redaction must be deterministic and reversible (with proper authorization).

4. Complete Audit Trail

Every AI request must be logged—but logs cannot contain sensitive data. We needed structured metadata only.

5. Instant Kill Switch

If something goes wrong, we must be able to disable the entire system in under 1 second, with zero code deployment.

Every requirement came down to one principle: do not compromise trust for speed. These requirements ruled out every off-the-shelf solution. So we built our own.

Architecture: The Three-Layer Privacy Shield

The result is a three‑layer architecture designed with privacy at its core.

Each request passes through controlled stages—starting with gating and authentication checks, moving through automated detection of sensitive patterns, and finally routing through isolated AI models that operate only on redacted content. Here's how it works:

```
┌─────────────────┐
│  Case Analysis  │  (Encrypted whistleblower report)
│     Request     │
└────────┬────────┘
         │
         ▼
┌─────────────────────────────────────────┐
│ LAYER 1: Feature Flag Check             │
│ ✓ Is AI Gateway enabled for this org?   │
│ ✓ Check token limits (daily cap)        │
│ ✓ Verify authentication                 │
└────────┬────────────────────────────────┘
         │
         ▼
┌─────────────────────────────────────────┐
│ LAYER 2: PII Detection & Redaction      │
│ ✓ Scan for 11 PII patterns              │
│ ✓ Replace with placeholders             │
│ ✓ Store redaction map (24h expiry)      │
└────────┬────────────────────────────────┘
         │
         ▼
┌─────────────────────────────────────────┐
│ LAYER 3: AI Vendor Routing              │
│ ✓ Route to Custom AI Model              │
│ ✓ Log metadata only (no content)        │
│ ✓ Return response with redaction map    │
└────────┬────────────────────────────────┘
         │
         ▼
┌─────────────────┐
│   AI Analysis   │  (PII safely restored for user)
│    Returned     │
└─────────────────┘
```

Let's break down each layer.

Layer 1: The Feature Flag Kill Switch

We learned from the Facebook playbook: never deploy features that can't be instantly disabled.

The feature flag kill switch is the control point for every AI capability we ship. A dynamic, database-backed flag system lets us toggle AI features per organization or user segment in real time, so new features can be rolled out gradually, enabled selectively, or disabled instantly in response to any risk or operational concern, all without a redeployment or code change.

Our feature flag system sits in the database:

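```sql
-- One row per feature; checked on every AI Gateway request
CREATE TABLE feature_flags (
  feature_name       TEXT PRIMARY KEY,
  is_enabled         BOOLEAN DEFAULT false,  -- global on/off (the kill switch)
  rollout_percentage INTEGER DEFAULT 0,      -- share of orgs enabled during gradual rollout
  enabled_orgs       UUID[]                  -- organizations enabled explicitly
);
```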

Every AI Gateway request starts with a check:

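```typescript
// Ask the database whether the AI Gateway is enabled for this organization
const { data: isEnabled } = await supabase.rpc('is_feature_enabled', {
  p_feature_name: 'ai_gateway',
  p_organization_id: organizationId,
});

// If the RPC itself fails, `data` is null, so the gateway fails closed
if (!isEnabled) {
  return { error: 'AI Gateway not enabled', code: 'FEATURE_DISABLED' };
}
```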

Why this matters:

Disable globally in <1 second (no code deployment)

Gradual rollout (5% → 25% → 100%)

Per-organization control (enterprise customers can opt out)

A/B testing ready
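The `is_feature_enabled` function itself isn't shown. Below is a minimal TypeScript sketch of how such a check could combine the three `feature_flags` columns, assuming that `is_enabled` overrides everything, that `enabled_orgs` is an explicit allow-list, and that `rollout_percentage` is applied by hashing the organization ID into a stable bucket from 0 to 99. The `FeatureFlag` interface and helper names are hypothetical, and the production check runs in PostgreSQL rather than TypeScript.

```typescript
// Hypothetical in-memory mirror of one row of the feature_flags table.
interface FeatureFlag {
  featureName: string;
  isEnabled: boolean;        // global kill switch
  rolloutPercentage: number; // 0-100
  enabledOrgs: string[];     // organization IDs enabled explicitly
}

// FNV-1a (32-bit) hash of the org ID, reduced to a bucket in [0, 99].
// A stable hash keeps each org in the same bucket as the percentage grows.
function rolloutBucket(orgId: string): number {
  let hash = 0x811c9dc5;
  for (let i = 0; i < orgId.length; i++) {
    hash ^= orgId.charCodeAt(i);
    hash = Math.imul(hash, 0x01000193) >>> 0;
  }
  return hash % 100;
}

function isFeatureEnabled(flag: FeatureFlag, orgId: string): boolean {
  if (!flag.isEnabled) return false;                    // kill switch overrides everything
  if (flag.enabledOrgs.includes(orgId)) return true;    // explicitly enabled org
  return rolloutBucket(orgId) < flag.rolloutPercentage; // percentage rollout
}
```

Because buckets are stable, raising the percentage from 5 to 25 only adds organizations; no organization that already had access loses it.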

This control is crucial in sensitive environments, where privacy and reliability are paramount. Because the flag is managed centrally, administrators can shut down the AI gateway immediately if an issue arises: a single action protects every customer on the platform and limits potential exposure or unintended consequences within seconds.

When we launched, every organization was disabled by default. We tested with a single test org for 2 weeks before any customer saw it.
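Layer 1 in the diagram also enforces a daily token cap, which the text doesn't describe further. Here is a minimal sketch of a per-organization daily budget, assuming usage is bucketed by UTC calendar day and held in memory; the class and method names are hypothetical. A production version would persist counters in the database, and since the exact token count is only known after the model responds, it would likely reserve an estimate up front and reconcile it afterwards.

```typescript
// Hypothetical per-organization daily token budget, bucketed by UTC calendar day.
class DailyTokenBudget {
  private readonly dailyCap: number;
  // Key "orgId:YYYY-MM-DD" -> tokens used that day (old days are never evicted in this sketch).
  private readonly used = new Map<string, number>();

  constructor(dailyCap: number) {
    this.dailyCap = dailyCap;
  }

  private dayKey(orgId: string, now: Date): string {
    return `${orgId}:${now.toISOString().slice(0, 10)}`; // toISOString() is always UTC
  }

  // Records usage and returns true only if the request fits under today's cap.
  tryConsume(orgId: string, tokens: number, now: Date = new Date()): boolean {
    const key = this.dayKey(orgId, now);
    const current = this.used.get(key) ?? 0;
    if (current + tokens > this.dailyCap) return false;
    this.used.set(key, current + tokens);
    return true;
  }
}
```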

Layer 2: The PII Redaction Engine

The PII redaction engine is the heart of the privacy shield. It scans every case for patterns that match personal identifiers and replaces the real data with context-aware placeholders, so downstream AI analysis keeps its analytical context without ever seeing the underlying information.

Here's what happens when a case analysis request arrives:

Step 1: Detecting Sensitive Information

We start by scanning every report for types of personal or sensitive information. This includes details like emails, phone numbers, ID numbers, and addresses. To make this possible, we use simple pattern-matching rules called regular expressions—these are just search formulas that look for familiar formats, such as an email address or a UK phone number.

For example, here’s how that works in code:

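```typescript
const piiPatterns = [
  // Email addresses
  { type: 'EMAIL', regex: /\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b/g },
  // UK numbers: +44 or a leading 0, then 10 digits, optionally split as "7700 900123"
  { type: 'PHONE_UK', regex: /(?:\+44\s?|\b0)\d{4}\s?\d{6}\b/g },
  // ...other patterns for US phones, passports, postcodes, etc.
];
```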

We chose this method because it is fast (under 5 ms per case in production) and reliable for well-formatted identifiers. Machine learning detectors may come later, but regex matching is nearly instant and needs minimal resources to run.

Step 2: Placeholder Substitution

Once we spot personal information, we don’t simply delete it—we swap it for a safe label, keeping track of what was changed. For example, if we find an email address in a report, we’ll replace it with [EMAIL_1], and if there’s a phone number, it might become [PHONE_1].

The code captures this mapping so it can be reversed later if a compliance officer has authorization:

```typescript
// Before: "Contact John at john@email.com or 555-1234"
// After:  "Contact John at [EMAIL_1] or [PHONE_1]"
const redactionMap: Record<string, string> = {
  'john@email.com': '[EMAIL_1]', // original value -> placeholder
  '555-1234': '[PHONE_1]',
};
```

This lets the AI still understand the situation (for example, knowing someone has provided contact details) without ever exposing the real data to outside systems. If needed, authorized staff can restore the specifics using the stored mapping.
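To make the numbering concrete, here is a minimal sketch of a redaction function, assuming four of the 11 pattern types: email, UK phone, IPv4 address, and a simplified UK National Insurance number format. The pattern set, the `redact` function, and the `RedactionResult` shape are illustrative, not Disclosurely's library. Each type keeps its own counter, and a value seen twice reuses its placeholder, which keeps the output deterministic.

```typescript
// Illustrative subset of PII patterns (the production set has 11 types).
const PII_PATTERNS: { type: string; regex: RegExp }[] = [
  { type: 'EMAIL', regex: /\b[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}\b/g },
  { type: 'PHONE_UK', regex: /(?:\+44\s?|\b0)\d{4}\s?\d{6}\b/g },
  { type: 'IP_ADDRESS', regex: /\b(?:\d{1,3}\.){3}\d{1,3}\b/g },
  { type: 'NI_NUMBER', regex: /\b[A-Z]{2}\d{6}[A-D]\b/g }, // simplified NI number format
];

interface RedactionResult {
  redactedText: string;
  redactionMap: Record<string, string>; // original value -> placeholder
}

function redact(text: string): RedactionResult {
  const map = new Map<string, string>();
  let redactedText = text;
  for (const { type, regex } of PII_PATTERNS) {
    let counter = 0; // numbering restarts for each PII type
    redactedText = redactedText.replace(regex, (match) => {
      let placeholder = map.get(match);
      if (placeholder === undefined) {
        // First occurrence of this value: assign the next number for this type.
        counter += 1;
        placeholder = `[${type}_${counter}]`;
        map.set(match, placeholder);
      }
      return placeholder;
    });
  }
  return { redactedText, redactionMap: Object.fromEntries(map) };
}
```

Applied to the Step 4 sample report, this sketch produces exactly the redacted version shown there. Note that names such as Sarah Johnson pass through untouched, because regex patterns like these don't detect names.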

Why placeholders matter:

AI can still understand context ("Contact at [EMAIL_1]" makes sense)

Compliance officers can restore original data if authorized

Deterministic (same input = same output, for consistent analysis)
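Restoring data is the inverse operation. A minimal sketch, assuming the map is stored as original value to placeholder (as in the example above) and that the caller has already resolved the authorization decision into a boolean; `restore` and its `isAuthorized` parameter are hypothetical.

```typescript
type RedactionMap = Record<string, string>; // original value -> placeholder

function restore(redactedText: string, redactionMap: RedactionMap, isAuthorized: boolean): string {
  if (!isAuthorized) {
    throw new Error('Not authorized to restore redacted data');
  }
  // Invert the map so each placeholder points back to its original value.
  const byPlaceholder = new Map<string, string>();
  for (const [original, placeholder] of Object.entries(redactionMap)) {
    byPlaceholder.set(placeholder, original);
  }
  // Placeholders look like [TYPE_N]; unknown ones are left as-is.
  return redactedText.replace(/\[[A-Z_]+_\d+\]/g, (ph) => byPlaceholder.get(ph) ?? ph);
}
```

Matching all placeholders in a single regex pass, rather than calling `replace` once per map entry, avoids double replacement if an original value happens to contain placeholder-like text.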

Step 3: Temporary Storage and Cleanup

All substitution maps are stored securely—but only for a short period, usually 24 hours, to meet privacy rules and minimize risk. After that, they're automatically deleted to prevent unnecessary retention.

The logic in our database is simple and focused on privacy:

```sql
CREATE TABLE ai_gateway_redaction_maps (
  request_id      UUID PRIMARY KEY,
  organization_id UUID NOT NULL,
  redaction_map   JSONB NOT NULL,
  expires_at      TIMESTAMPTZ NOT NULL DEFAULT now() + INTERVAL '24 hours'
  -- Other fields as needed
);

-- Lets the cleanup job find expired rows without a full table scan
CREATE INDEX idx_redaction_expiry ON ai_gateway_redaction_maps (expires_at);
```

A daily automated task removes expired records, so personal data is always kept to an absolute minimum.
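One detail worth noting: with a once-a-day cleanup job, a map that expires just after a run can linger for almost another day before it is deleted. Checking the expiry on every read closes that gap. The in-memory sketch below illustrates both halves; the class and method names are hypothetical stand-ins for queries against `ai_gateway_redaction_maps`.

```typescript
const TTL_MS = 24 * 60 * 60 * 1000; // 24-hour retention window

interface StoredRedactionMap {
  organizationId: string;
  redactionMap: Record<string, string>;
  expiresAt: number; // epoch milliseconds
}

class RedactionMapStore {
  private readonly rows = new Map<string, StoredRedactionMap>();

  save(requestId: string, organizationId: string, redactionMap: Record<string, string>, now: number = Date.now()): void {
    this.rows.set(requestId, { organizationId, redactionMap, expiresAt: now + TTL_MS });
  }

  // Enforces expiry on read, so an expired row is unusable even before cleanup runs.
  get(requestId: string, now: number = Date.now()): Record<string, string> | undefined {
    const row = this.rows.get(requestId);
    if (row === undefined || row.expiresAt <= now) return undefined;
    return row.redactionMap;
  }

  // Counterpart of the daily cleanup job; returns the number of rows deleted.
  purgeExpired(now: number = Date.now()): number {
    let removed = 0;
    for (const [requestId, row] of this.rows) {
      if (row.expiresAt <= now) {
        this.rows.delete(requestId); // deleting during Map iteration is safe in JS
        removed += 1;
      }
    }
    return removed;
  }
}
```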

Step 4: What Gets Sent to AI?

After redaction, the AI model receives a version of the report that keeps the context but hides all private information. Here’s an example:

Original report: Reporter: Sarah Johnson (sarah.johnson@acmecorp.com, +44 7700 900123) Incident: CFO James Miller (james.miller@acmecorp.com) instructed me to alter Q3 financials. Evidence on shared drive at 192.168.1.50. My NI number is AB123456C for verification.

After redaction (what the AI sees): Reporter: Sarah Johnson ([EMAIL_1], [PHONE_UK_1]) Incident: CFO James Miller ([EMAIL_2]) instructed me to alter Q3 financials. Evidence on shared drive at [IP_ADDRESS_1]. My NI number is [NI_NUMBER_1] for verification.

This ensures the AI can analyze and generate insights without ever seeing or saving sensitive user data.

The AI can still perform full analysis:

It knows there's a reporter and a CFO

It understands the allegation (financial misconduct)

It recognizes evidence exists

It can assess severity and recommend next steps

But the AI vendor never sees:

sarah.johnson@acmecorp.com

james.miller@acmecorp.com

+44 7700 900123

192.168.1.50

AB123456C
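A cheap safety net before any request leaves the gateway is to confirm that none of the values recorded in the redaction map still appear in the outgoing prompt. The sketch below is a hypothetical addition, not part of the system as described; it can only catch values the detector actually found, so it guards against substitution bugs, not against PII the patterns missed.

```typescript
// Returns the original values that are still present in the prompt.
function findLeakedValues(prompt: string, redactionMap: Record<string, string>): string[] {
  return Object.keys(redactionMap).filter((original) => prompt.includes(original));
}

// Blocks the request if anything leaked. The error reports only a count,
// so the exception itself never carries PII into logs.
function assertSafeToSend(prompt: string, redactionMap: Record<string, string>): void {
  const leaked = findLeakedValues(prompt, redactionMap);
  if (leaked.length > 0) {
    throw new Error(`Blocked: ${leaked.length} redacted value(s) found in outgoing prompt`);
  }
}
```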

To further enhance privacy, the mappings that allow re-identification (where authorized) are stored in a tightly controlled system with strict, short-lived expirations. This approach complies with data minimization standards, prevents unnecessary retention of sensitive information, and makes it nearly impossible for an unauthorized party to reconstruct original reports after the permitted review window closes.

None of these values ever reaches the Custom AI Model.

Layer 3: Multi-Model Routing

After the redaction engine finishes sanitizing a case, our multi-model routing system decides which AI model is best for the job. This lets us use different AI engines for different types of analysis or customer needs: sometimes our own model, sometimes external options. Whatever the choice, every AI provider receives only anonymized, structured data, never raw or original sensitive information.

Here’s a simple example from our code:

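```typescript
let apiEndpoint: string;
let apiKey: string | undefined;

// Route by model-name prefix. Each vendor needs its own API key
// (the per-vendor variable names here are illustrative).
if (model.startsWith('gpt-') || model.startsWith('o1-')) {
  // AI Model 1 (OpenAI-style chat completions endpoint)
  apiEndpoint = 'https://api.openai.com/v1/chat/completions';
  apiKey = Deno.env.get('OPENAI_API_KEY');
} else if (model.startsWith('claude-')) {
  // AI Model 2 (Anthropic Messages API; its request body and auth headers differ)
  apiEndpoint = 'https://api.anthropic.com/v1/messages';
  apiKey = Deno.env.get('ANTHROPIC_API_KEY');
} else {
  // Default: our own secure model
  apiEndpoint = 'https://api.customaimodel.com/v1/chat/completions';
  apiKey = Deno.env.get('CUSTOMMODEL_API_KEY');
}
```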

This structure means new AI models or tasks (like searching for similar cases, or running complex risk checks) can be added in the future—without needing to redesign how privacy is protected. Logs are always kept for oversight, but only with safe, non-sensitive details.
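One way to keep routing both extensible and privacy-safe is to make the vendor table data rather than branching code, and to use a TypeScript branded type so that only text produced by the redaction layer can be handed to the router. This is a sketch under assumptions: the `RedactedText` brand, `markRedacted`, `prepareRequest`, and the environment-variable names are hypothetical; the endpoints are the ones from the snippet above.

```typescript
// A string that has passed through redaction. The brand exists only at compile time.
type RedactedText = string & { readonly __brand: 'RedactedText' };

// In a real system only the redaction layer would call this.
function markRedacted(text: string): RedactedText {
  return text as RedactedText;
}

interface Route {
  vendor: string;
  endpoint: string;
  apiKeyEnv: string; // hypothetical environment variable name
}

// Ordered table: the first matching prefix wins. Adding a model family is one entry.
const ROUTES: { prefixes: string[]; route: Route }[] = [
  { prefixes: ['gpt-', 'o1-'], route: { vendor: 'openai', endpoint: 'https://api.openai.com/v1/chat/completions', apiKeyEnv: 'OPENAI_API_KEY' } },
  { prefixes: ['claude-'], route: { vendor: 'anthropic', endpoint: 'https://api.anthropic.com/v1/messages', apiKeyEnv: 'ANTHROPIC_API_KEY' } },
];

const DEFAULT_ROUTE: Route = {
  vendor: 'custom',
  endpoint: 'https://api.customaimodel.com/v1/chat/completions',
  apiKeyEnv: 'CUSTOMMODEL_API_KEY',
};

function resolveRoute(model: string): Route {
  const entry = ROUTES.find(({ prefixes }) => prefixes.some((p) => model.startsWith(p)));
  return entry ? entry.route : DEFAULT_ROUTE;
}

// Accepts only RedactedText, so passing a raw string is a compile-time error.
function prepareRequest(model: string, prompt: RedactedText): { route: Route; prompt: RedactedText } {
  return { route: resolveRoute(model), prompt };
}
```

The brand is erased at runtime, so it prevents mistakes in our own code rather than acting as a security boundary; the redaction step itself still has to run.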

Why A Custom AI Model?

Quality: Comparable to GPT-3.5 for structured analysis

Privacy: No training on customer data (the same commitment OpenAI and Anthropic make for API data)

Routing also keeps oversight uniform: whichever model handles a request, the gateway applies the same privacy framework and records metadata only, so adding specialized tools (semantic similarity, advanced regulatory checks) never requires storing case content.

Future: We're adding OpenAI for embeddings (semantic search) and Anthropic for premium analysis (complex legal reasoning).

The Audit Trail: Logging Without Sensitive Data

Every AI request is automatically logged, but with privacy as the top priority. What we record includes things like request IDs, what model was used, performance statistics, and any errors. Crucially, we never store original report content, redacted data, or any information that could identify individuals.

Here’s the kind of information we capture in a database:

```sql
CREATE TABLE ai_gateway_logs (
  request_id      UUID PRIMARY KEY,
  organization_id UUID NOT NULL,
  model           TEXT NOT NULL,
  vendor          TEXT NOT NULL,
  -- Other non-sensitive details for analytics or billing
  created_at      TIMESTAMPTZ DEFAULT now()
);

-- NO prompt, NO completion, NO redaction map in logs!
```

What we log:

✅ Request ID (for debugging)

✅ Organization (for billing)

✅ Token usage (for limits)

✅ Latency (for performance monitoring)

✅ PII detected? (yes/no, count only)

✅ Errors (type, not content)

What we DON'T log:

❌ Original case content

❌ Redacted content

❌ AI response

❌ Redaction map

❌ Any identifiable data

This gives us complete observability without compromising privacy.
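The metadata-only rule can also be enforced in code: if the log record's type has no field that could hold case text, nothing sensitive can be written by accident. The sketch below uses a hypothetical shape derived from the "What we log" list; all field and function names are assumptions. The PII count comes from the size of the redaction map, never its contents.

```typescript
// Every field is an ID, label, number, or boolean: no free text from the case.
interface AuditLogEntry {
  requestId: string;
  organizationId: string;
  model: string;
  vendor: string;
  promptTokens: number;
  completionTokens: number;
  latencyMs: number;
  piiDetected: boolean;
  piiCount: number;         // distinct redacted values, not occurrences
  errorType: string | null; // a short error code, never the error message
}

// What the gateway has in hand after a request; note it does contain content.
interface GatewayResult {
  requestId: string;
  organizationId: string;
  model: string;
  vendor: string;
  redactedPrompt: string;
  completion: string;
  redactionMap: Record<string, string>;
  promptTokens: number;
  completionTokens: number;
  startedAt: number;  // epoch ms
  finishedAt: number; // epoch ms
  errorType?: string;
}

// Copies metadata field by field; prompt, completion, and map values are dropped.
function toAuditLogEntry(result: GatewayResult): AuditLogEntry {
  const piiCount = Object.keys(result.redactionMap).length;
  return {
    requestId: result.requestId,
    organizationId: result.organizationId,
    model: result.model,
    vendor: result.vendor,
    promptTokens: result.promptTokens,
    completionTokens: result.completionTokens,
    latencyMs: result.finishedAt - result.startedAt,
    piiDetected: piiCount > 0,
    piiCount,
    errorType: result.errorType ?? null,
  };
}
```

Building the entry field by field, instead of spreading the result object and deleting sensitive keys, means a new content field added to `GatewayResult` later cannot slip into the logs unnoticed.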

After several weeks in use, this approach has proven both fast and extremely low-cost: case analysis now takes under 2 seconds, and detection of sensitive details is nearly instant. For each report, savings are dramatic—analysis that took up to an hour can now be done for a fraction of a penny, while protecting privacy every step of the way.

Real-World Performance

After 8 weeks in production (limited rollout):

Speed

Average latency: 1.2 seconds (case analysis)

PII detection: <5ms (regex-based)

Total overhead: ~20ms (feature flag + redaction + logging)

Accuracy

PII detection rate: 94.2% (based on manual review of 200 random cases)

False positives: 2.1% (e.g., "IP address" in text, not actual IP)

False negatives: 3.7% (mostly non-standard phone formats)

Cost

Average cost per analysis: ~$0.0002 (Custom AI Model; $0.10 across 500 analyses)

Monthly cost (50 orgs, 500 analyses): $0.10

ROI for customers:

Manual analysis: 30-60 min/case = $50-100 (at $100/hour consultant rate)

AI analysis: about 10 seconds per case, giving compliance teams private, AI-powered assistance almost immediately

Savings: 99.9996–99.9998% cost reduction, 180–360x faster
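The ROI figures follow directly from the numbers above. This small sketch reproduces them using only the stated inputs: $0.10 for 500 analyses, 30 to 60 minutes of manual work at $100/hour, and about 10 seconds per AI analysis.

```typescript
const MONTHLY_COST_USD = 0.10;
const MONTHLY_ANALYSES = 500;
const CONSULTANT_RATE_USD_PER_HOUR = 100;
const AI_SECONDS_PER_CASE = 10;

const aiCostPerCase = MONTHLY_COST_USD / MONTHLY_ANALYSES; // about $0.0002

function roi(manualMinutes: number): { manualCost: number; costReductionPct: number; speedup: number } {
  const manualCost = (manualMinutes / 60) * CONSULTANT_RATE_USD_PER_HOUR;
  const costReductionPct = (1 - aiCostPerCase / manualCost) * 100;
  const speedup = (manualMinutes * 60) / AI_SECONDS_PER_CASE;
  return { manualCost, costReductionPct, speedup };
}

for (const minutes of [30, 60]) {
  const r = roi(minutes);
  console.log(`${minutes} min: $${r.manualCost} manual, ${r.costReductionPct.toFixed(4)}% cheaper, ${r.speedup}x faster`);
}
```

The 99.9998% figure corresponds to the 60-minute case ($100 of consultant time), and 180x to the 30-minute case; the 30-minute case gives 99.9996% and the 60-minute case 360x.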

In practice, this privacy-first logging enables us to monitor system health, usage patterns, and billing without holding onto unnecessary details. Our audit trail helps demonstrate compliance and supports investigations or debugging, but never exposes personally identifiable data or actual case content. The end result is complete system observability and accountability—with privacy always preserved by design.

What We Got Wrong (And Fixed)

Mistake 1: Over-Engineering the PII Detector

Initial approach: We tried using Microsoft Presidio (ML-based NER) for name detection.

Problem:

Added 300ms latency

Required separate service ($50/month)

Only improved detection by 3%

Fix: Removed it. Regex covers 95% of cases. For the 5% edge cases (names, addresses), we'll add Presidio in Phase 2 only for customers who need it.

Lesson: Don't optimize for edge cases before you have product-market fit.

Mistake 2: Storing Redaction Maps Forever

Initial approach: Keep redaction maps indefinitely "in case we need them."

Problem:

Violates the data minimization and storage limitation principles (GDPR Article 5(1)(c) and (e))

Creates liability (if database breached, maps could restore PII)

No legitimate business need beyond 24 hours

Fix: Added automatic 24-hour expiry with daily cleanup cron.

Lesson: The best way to protect data is to not have it.

Mistake 3: No Feature Flags

Initial approach: Deploy AI Gateway enabled for all orgs at once.

Problem:

If something breaks, rollback requires code deployment (10-15 minutes)

Can't A/B test

Can't do gradual rollout

Fix: Built feature flag system first, then deployed AI Gateway disabled by default.

Lesson: Every production feature needs a kill switch.

The Security Review

Before launching, we asked three questions:

1. What if the database is breached?

Worst case: Attacker gets redaction maps (valid for 24 hours).

Mitigation:

Redaction maps are isolated (separate table)

Row-Level Security (RLS) enforced

Maps expire automatically

Even with the maps, an attacker doesn't get the original case content (that's encrypted separately)

Verdict: Low risk (time-limited exposure, requires multiple breaches).

2. What if the AI vendor stores our data?

Worst case: Custom AI Model trains on redacted case content.

Mitigation:

Only redacted data sent (no PII)

Generic case patterns don't leak sensitive info

Our AI model vendor's privacy policy prohibits training on API data

We can switch vendors in <1 hour (multi-model architecture)

Verdict: Very low risk (data already anonymized).

3. What if a compliance officer wants to abuse the system?

Worst case: User with access downloads 100 redaction maps to de-anonymize cases.

Mitigation:

Audit logs track every redaction map access

Rate limiting (max 10 requests/minute)

Role-based access control (only org admins can access)

Alerts for unusual activity (>20 maps accessed in 1 hour)
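Both thresholds above can be enforced with one sliding-window record per user. Here is a minimal in-memory sketch, assuming the limits apply per user, that only granted accesses count toward the hourly alert, and that timestamps are epoch milliseconds; the class name and return shape are hypothetical.

```typescript
const MINUTE_MS = 60 * 1000;
const HOUR_MS = 60 * MINUTE_MS;

class MapAccessMonitor {
  private readonly perMinuteLimit: number;
  private readonly hourlyAlertThreshold: number;
  private readonly accesses = new Map<string, number[]>(); // userId -> granted access times

  constructor(perMinuteLimit = 10, hourlyAlertThreshold = 20) {
    this.perMinuteLimit = perMinuteLimit;
    this.hourlyAlertThreshold = hourlyAlertThreshold;
  }

  // Records an attempt: `allowed` is the rate-limit decision, `alert` flags unusual volume.
  recordAccess(userId: string, now: number = Date.now()): { allowed: boolean; alert: boolean } {
    // Keep only accesses from the last hour, the widest window we need.
    const recent = (this.accesses.get(userId) ?? []).filter((t) => t > now - HOUR_MS);
    const lastMinute = recent.filter((t) => t > now - MINUTE_MS).length;
    const allowed = lastMinute < this.perMinuteLimit;
    if (allowed) recent.push(now);
    this.accesses.set(userId, recent);
    return { allowed, alert: recent.length > this.hourlyAlertThreshold };
  }
}
```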

Verdict: Low risk (observable, rate-limited, requires elevated permissions).

Lessons From the Journey

Deploying privacy-first AI in production meant grappling with tough tradeoffs. Initial experiments with advanced name detection were set aside when they introduced latency without clear value. We learned that storing redaction mappings beyond a short window increased liability without benefit, and that feature flag systems were essential for safety and controlled release.

Security reviews shaped every iteration. Multiple safeguards ensure that—even in the worst-case scenario—no unencrypted sensitive content ever escapes our boundaries, and every action is tracked and rate-limited.

What’s Next

Our commitment to privacy doesn’t end here. New enhancements are underway for multi-language support, deeper semantic analysis, and private, air-gapped deployments for regulated industries. We’re exploring open-sourcing selected components where it fosters trust and transparency, but keeping core gateway logic proprietary to secure our unique approach.

Disclosurely’s AI Gateway now powers instant, privacy-respecting case analysis and audit-ready oversight for organizations that demand more than promises. The future of AI in compliance is designing systems where trust isn’t assumed—it’s guaranteed by design.

Interested in seeing more? Get in touch or try a demo at Disclosurely. We’re actively seeking partners, engineers, and compliance leaders who share our vision for AI that protects people first.

Try It Yourself

Disclosurely's AI Gateway is live in production. If you're a compliance officer handling sensitive cases:

Sign up at disclosurely.com

Upload a test case

Try the AI analysis with PII redaction

See the audit logs (zero sensitive data stored)

Open-Source Components (Coming Soon):

PII redaction library (TypeScript)

Feature flag system (PostgreSQL functions)

Audit logging patterns (SQL schema)

Building AI for sensitive data isn't about choosing between innovation and privacy. It's about designing systems where privacy enables innovation.

Our AI Gateway proves you can have:

✅ Instant case analysis (1-2 seconds)

✅ Zero sensitive data sent to AI vendors

✅ Complete audit trails

✅ Sub-penny costs per analysis

✅ Instant rollback capability

The future of AI in compliance isn't about trusting AI providers with your data. It's about architecting systems where trust isn't required.

That's what we built. And it's just the beginning.

Want to learn more?

📧 Email: support@disclosurely.com

📸 Instagram: @disclosurely

💼 LinkedIn: Disclosurely

🔗 GitHub: [Coming Soon - Open Source Components]

Hiring: We're looking for people who care about privacy, compliance, and building systems that actually protect people. Join us.

Special thanks to our beta testers who trusted us with their most sensitive data, and to the compliance officers who provided feedback that shaped every design decision.